Google's fleet of robotic Toyota Priuses has now logged more than 190,000 miles (about 300,000 kilometers), driving in city traffic, on busy highways, and on mountainous roads with only occasional human intervention. The project is still far from becoming commercially viable, but Google has set up a demonstration system on its campus, using driverless golf carts, which points to how the technology could change transportation even in the near future.

Stanford University professor Sebastian Thrun, who guides the project, and Google engineer Chris Urmson discussed these and other details in a keynote speech at the IEEE International Conference on Intelligent Robots and Systems in San Francisco last month.

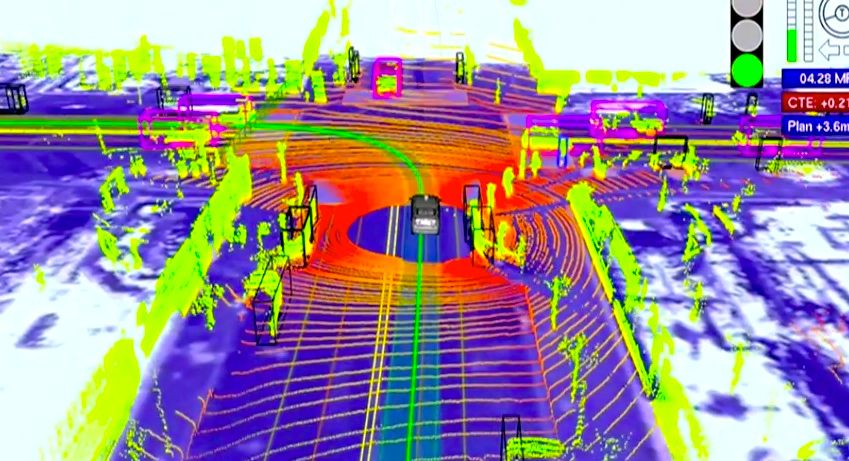

Thrun and Urmson explained how the car works and showed videos of the road tests, including footage of what the on-board computer "sees" and how it detects other vehicles, pedestrians, and traffic lights.

Google has released details and videos of the project before, but this is the first time HSIT has seen some of this footage, and it's impressive. It actually changed our view of the whole project, which we used to consider a bit far-fetched. Now we think this technology could really help to achieve some of the goals Thrun has in sight: reducing road accidents, congestion, and fuel consumption.

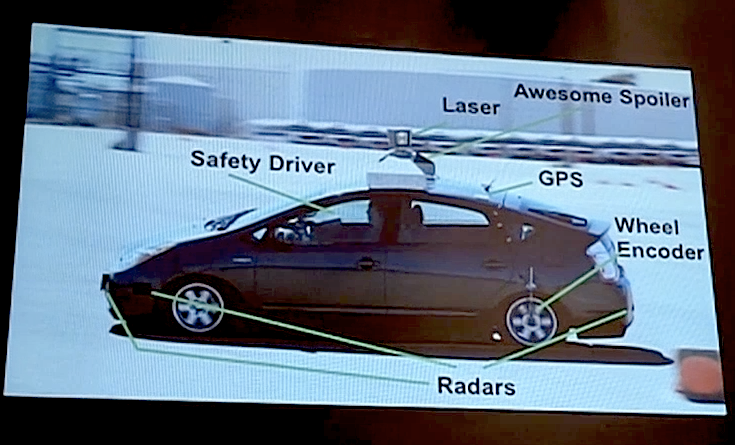

Urmson, who is the tech lead for the project, said that the "heart of our system" is a laser range finder mounted on the roof of the car. The device, a Velodyne 64-beam laser, generates a detailed 3D map of the environment. The car then combines the laser measurements with high-resolution maps of the world, producing different types of data models that allow it to drive itself while avoiding obstacles and respecting traffic laws.
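To give a feel for how laser measurements become a 3D map, here is a minimal sketch that turns a single laser return (a distance plus the beam's horizontal and vertical angles) into a 3D point. The function name and the axis convention (x forward, y left, z up) are assumptions for illustration, not details of Google's software or of the Velodyne device.

```python
import math

def laser_return_to_point(range_m, azimuth_deg, elevation_deg):
    """Convert one laser return to an (x, y, z) point in the sensor frame.

    Assumed convention (hypothetical): x forward, y left, z up;
    azimuth is measured in the horizontal plane, elevation above it.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)

# A return 10 m away, straight ahead and level with the sensor.
ahead = laser_return_to_point(10.0, 0.0, 0.0)
# A return 10 m away, directly to the left.
left = laser_return_to_point(10.0, 90.0, 0.0)
```

Repeating this for every beam and every rotation of the sensor yields a cloud of points, which is the raw material for the kind of 3D picture described above.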

The vehicle also carries other sensors, which include: four radars, mounted on the front and rear bumpers, that allow the car to "see" far enough to be able to deal with fast traffic on freeways; a camera, positioned near the rear-view mirror, that detects traffic lights; and a GPS, inertial measurement unit, and wheel encoder, that determine the vehicle's location and keep track of its movements.

Here's a slide showing the different subsystems (the camera is not shown):

Two things seem particularly interesting about Google's approach. First, it relies on very detailed maps of the roads and terrain, something that Urmson said is essential to determine accurately where the car is. Using GPS-based techniques alone, he said, the location could be off by several meters.
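A rough way to see why a precise map-based estimate matters: when two independent position estimates are combined, the more certain one dominates. The sketch below uses standard inverse-variance weighting in one dimension. The numbers are made up for illustration, and nothing here is claimed to be the method Google actually uses.

```python
def fuse(estimate_a, sigma_a, estimate_b, sigma_b):
    """Combine two independent 1D position estimates by inverse-variance weighting."""
    weight_a = 1.0 / sigma_a ** 2
    weight_b = 1.0 / sigma_b ** 2
    fused = (weight_a * estimate_a + weight_b * estimate_b) / (weight_a + weight_b)
    fused_sigma = (1.0 / (weight_a + weight_b)) ** 0.5
    return fused, fused_sigma

# Hypothetical values: GPS puts the car 103.0 m along the road with about
# 3 m of uncertainty; matching laser data against the detailed map puts it
# at 100.2 m with about 0.1 m of uncertainty.
position, sigma = fuse(103.0, 3.0, 100.2, 0.1)
print(f"{position:.2f} m +/- {sigma:.2f} m")
```

With these assumed numbers the fused estimate sits almost exactly on the map-based value, which is the point: a GPS reading that may be off by several meters adds very little once a much tighter estimate is available.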

The second thing is that, before sending the self-driving car on a road test, Google engineers drive along the route one or more times to gather data about the environment. When it's the autonomous vehicle's turn to drive itself, it compares the data it is acquiring to the previously recorded data, an approach that is useful to differentiate pedestrians from stationary objects like poles and mailboxes.
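The idea of comparing live data against a previously recorded drive can be sketched as a simple grid lookup: anything the car senses now that falls in a grid cell that was empty during the earlier drive is a candidate moving object. This is a deliberately simplified 2D illustration; the function names, the 0.5 m cell size, and the sample coordinates are all assumptions.

```python
def to_cell(point, cell_size=0.5):
    """Map an (x, y) position in meters to a grid cell index."""
    x, y = point
    return (int(x // cell_size), int(y // cell_size))

def find_new_obstacles(prior_points, live_points, cell_size=0.5):
    """Return live points that fall in cells that were empty in the prior drive."""
    prior_cells = {to_cell(p, cell_size) for p in prior_points}
    return [p for p in live_points if to_cell(p, cell_size) not in prior_cells]

# Recorded earlier (hypothetical): a pole and a mailbox.
prior = [(2.0, 5.0), (8.1, 3.2)]
# Seen now: the same pole and mailbox, slightly offset by sensor noise,
# plus something new at (5.0, 4.0), which could be a pedestrian.
live = [(2.1, 5.1), (8.2, 3.3), (5.0, 4.0)]

candidates = find_new_obstacles(prior, live)
print(candidates)  # [(5.0, 4.0)]
```

A real system would have to cope with noise that pushes a stationary object across a cell boundary, and with objects that were parked during the first drive but have since moved, so treat this only as the core intuition.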

The video above shows the results. At one point you can see the car stopping at an intersection. After the light turns green, the car starts a left turn, but there are pedestrians crossing. No problem: It yields to the pedestrians, and even to a guy who decides to cross at the last minute.

Sometimes, however, the car has to be more "aggressive." When going through a four-way intersection, for example, it yields to other vehicles based on road rules; but if other cars don't reciprocate, it advances a bit to show the other drivers its intention. Without programming that kind of behavior, Urmson said, it would be impossible for the robot car to drive in the real world.
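The four-way intersection behavior can be pictured as a small decision rule: wait for vehicles that arrived first, go when the others yield, and edge forward if they don't. The sketch below is a guess at the general shape of such logic. The names, the arrival-time rule, and the two-second patience threshold are assumptions, not anything Urmson described in detail.

```python
from enum import Enum

class Action(Enum):
    WAIT = "wait"
    CREEP = "creep"   # advance a little to signal intent
    GO = "go"

def choose_action(our_arrival, other_arrivals, others_are_yielding,
                  seconds_waiting, patience_s=2.0):
    """Pick an action at a four-way stop.

    other_arrivals lists arrival times of vehicles still waiting at the stop.
    """
    # Road rules first: anyone who arrived before us goes before us.
    if any(t < our_arrival for t in other_arrivals):
        return Action.WAIT
    # It is our turn and the others are letting us through.
    if others_are_yielding:
        return Action.GO
    # It is our turn but the others are not reciprocating:
    # after a short wait, edge forward to show intent.
    if seconds_waiting >= patience_s:
        return Action.CREEP
    return Action.WAIT

print(choose_action(10.0, [8.0], True, 0.0))    # Action.WAIT
print(choose_action(10.0, [12.0], True, 0.0))   # Action.GO
print(choose_action(10.0, [12.0], False, 3.0))  # Action.CREEP
```

The interesting branch is the last one: strictly rule-following logic would wait forever there, which is why some assertiveness has to be built in.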

Clearly, the Google engineers are having a lot of fun (fast forward to 13:00 to see Urmson smiling broadly as the car speeds through Google's parking lot, the tires squealing at every turn).

But the project has a serious side. Thrun and his Google colleagues, including co-founders Larry Page and Sergey Brin, are convinced that smarter vehicles could help make transportation safer and more efficient: Cars would drive closer to each other, making better use of the 80 percent to 90 percent of empty space on roads, and also form speedy convoys on freeways.

They would react faster than humans to avoid accidents, potentially saving thousands of lives. Making vehicles smarter will require lots of computing power and data, and that's why it makes sense for Google to back the project, Thrun said in his keynote.

Urmson described another scenario they envision: Vehicles would become a shared resource, a service that people would use when needed. You'd just tap on your smartphone, and an autonomous car would show up where you are, ready to drive you anywhere. You'd just sit and relax or do work.

He said they put together a video showing a concept called Caddy Beta that demonstrates the idea of shared vehicles -- in this case, a fleet of autonomous golf carts. He said the golf carts are much simpler than the Priuses in terms of on-board sensors and computers. In fact, the carts communicate with sensors in the environment to determine their location and "see" the incoming traffic.

"This is one way we see in the future this technology can . . . actually make transportation better, make it more efficient," Urmson said.

Thrun and Urmson acknowledged that there are many challenges ahead, including improving the reliability of the cars and addressing daunting legal and liability issues. But they are optimistic. (Nevada recently became the first U.S. state to make self-driving cars legal.) All the problems of transportation that people see as a huge waste, "we see that as an opportunity," Thrun said.
